Disclaimer: our extraction method was custom-built and may have missed some instances, so some of the statistics are likely to be slightly conservative.

Three out of four S&P 500 companies have added new AI-related risks or updated existing ones in their most recent annual reports. This signals how widely AI is affecting most sectors of the economy, and also how fluid the picture remains as adoption and disruption unfold.

Malicious Actors

More than one third of companies added or expanded risks concerning malicious actors using AI

178 companies added or expanded risks concerning malicious actors leveraging AI for coordinated attacks. Many companies are shifting their language on this risk from something hypothetical to something they have directly experienced. Rather than describing the possibility that threat actors could use AI, many companies confirm that they are doing so, and even that they themselves have been targeted. Companies using this more affirmative language include Gen Digital, Salesforce, Intel and Visa Inc.

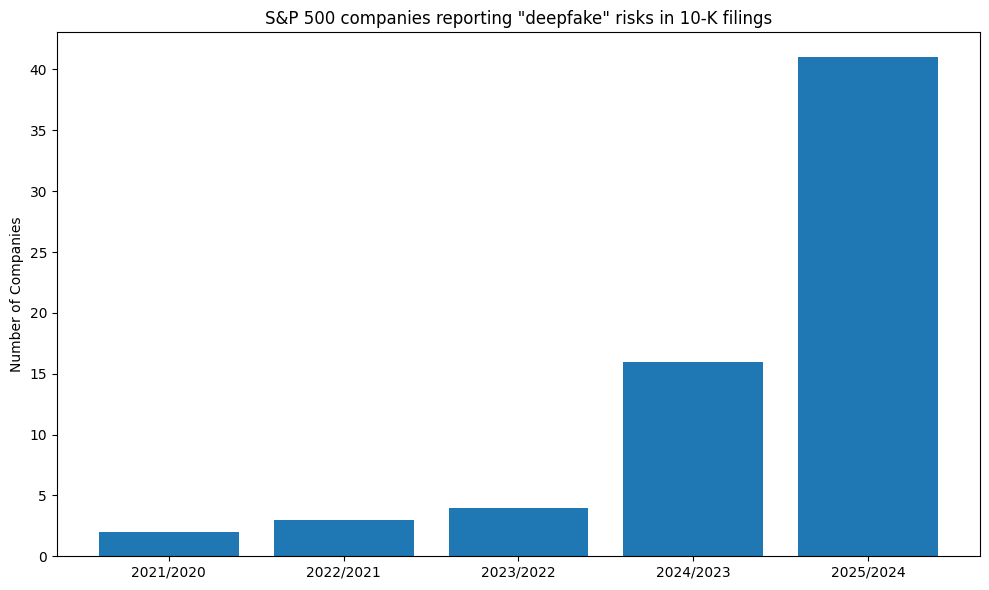

Companies mentioning deepfakes have more than doubled in number

Identity fraud, specifically through deepfakes, is one of the most commonly occurring malicious uses of AI mentioned. The number of companies mentioning deepfakes has more than doubled, rising from 15 to 39. This follows an exponential rise in use of the term over the past 5 years. The first companies in the S&P 500 ever to mention the term were Adobe and Marsh McLennan in 2019, 2 years after it was coined by a Reddit user of the same name who shared deepfake pornography. The pairing is perhaps fitting: Adobe produces software that could be weaponised to create deepfakes, whilst Marsh McLennan is an insurance broker that should ideally have its eyes on the horizon for novel risks that could affect its business model. In its most recent report, Marsh McLennan added an acknowledgement that the barrier to entry for malicious actors seeking to use AI effectively has fallen.
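Counts such as "companies mentioning deepfakes" come down to checking, for each company and year, whether a term appears in its risk-factor text. The sketch below illustrates that idea with simple keyword matching. It is not the custom extraction method used for this analysis, and the `filings` data, company names and regular expression are all hypothetical.

```python
import re

# Hypothetical input: risk-factor text keyed by (company, fiscal year).
# Real 10-K risk factors are far longer; these snippets are invented for illustration.
filings = {
    ("CompanyA", 2023): "Threat actors may use deepfakes to impersonate executives.",
    ("CompanyA", 2024): "Attackers have used deep fake audio of our leaders.",
    ("CompanyB", 2023): "Cyberattacks could disrupt our operations.",
    ("CompanyB", 2024): "Deepfake technology may be used to defraud customers.",
    ("CompanyC", 2024): "Generative AI may lower barriers for malicious actors.",
}

# Match "deepfake", "deepfakes", "deep fake" and "deep-fake", case-insensitively.
DEEPFAKE = re.compile(r"\bdeep[\s-]?fakes?\b", re.IGNORECASE)


def companies_mentioning(pattern: re.Pattern, year: int) -> set:
    """Return the companies whose filing for `year` matches `pattern` at least once."""
    return {
        company
        for (company, fy), text in filings.items()
        if fy == year and pattern.search(text)
    }


before = companies_mentioning(DEEPFAKE, 2023)
after = companies_mentioning(DEEPFAKE, 2024)
print(len(before), len(after))
print(sorted(after - before))  # companies mentioning the term for the first time
```

A pattern like this misses paraphrases such as "synthetic media" or "AI-generated impersonation", which is one way keyword-based counts can end up conservative.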

Companies highlight that deepfakes are often used to impersonate company leaders and employees. eBay Inc. revealed that within the past year it had been unsuccessfully targeted by hackers using an AI-generated voice impersonation of a company leader. Fox noted that politicians and journalists are also targets of deepfakes, which could ultimately “erode audience trust by making it difficult to determine what is real”.

Data Security

1 in 6 companies added or expanded the risk of proprietary data or intellectual property being exposed through interacting with AI systems

- 87 companies disclose that they are passing sensitive customer data through third-party large language models, raising concerns that the data could be used to train the providers' AI models.

- Companies mention that they have sought data-security protections from third-party providers

- Sherwin-Williams notes that if sensitive data or intellectual property were leaked or taken through interactions with AI, there is an increased risk that “existing intellectual property law may not provide adequate protection”

- Some companies, such as Microchip and Brown & Brown, limit their employees' use of third-party AI systems like ChatGPT, although they also concede that internal governance can be challenging and employees may circumvent the rules and use the systems anyway

- The risk also cuts both ways: companies worry they may be liable should another company's personal data be revealed to them via a language model, or if the language model they use comes under attack for poor data practices

49 companies (nearly 10% of the S&P 500) added or expanded discussion of risks concerning third-party providers of AI models and software

Energy

Over a quarter of utilities firms added references to AI’s increasing energy requirements

9 of the 31 utilities firms in the S&P 500 (29%, just over a quarter) added new references to AI’s increasing energy requirements within their risk factors
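The proportions quoted in these sections are simple ratios of the reported counts. The sketch below recomputes them from the figures in the text; the one assumption, flagged in the code, is a denominator of exactly 500 for the S&P 500, which is an approximation because the index's constituent count varies slightly.

```python
# Company counts taken from the text above.
# Assumption: the S&P 500 is treated as exactly 500 companies.
SP500 = 500
UTILITIES = 31  # utilities firms in the S&P 500, as stated in the text

counts = {
    "malicious actors using AI": (178, SP500),
    "sensitive data passed to third-party LLMs": (87, SP500),
    "third-party AI provider risks": (49, SP500),
    "AI energy requirements (utilities only)": (9, UTILITIES),
}

# Share of the relevant population for each risk category.
shares = {label: n / total for label, (n, total) in counts.items()}

for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

On this basis, 178 companies is just over a third (35.6%), 87 is close to 1 in 6 (17.4%), 49 is just under 10% (9.8%), and 9 of 31 utilities is 29.0%.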

Legislation

Mentions of the EU AI Act tripled

67 companies now mention the EU AI Act in their risk factors, up from 22 in the previous year. It appears to be the most widely cited piece of AI legislation amongst S&P 500 companies.

The EU AI Act entered into force in August 2024, and its requirements will become effective on a staggered basis, beginning February 2, 2025. The Act will impose material requirements on both the providers and deployers of AI technologies, with infringements punishable by sanctions of up to 7% of annual worldwide turnover or €35 million (whichever is higher) for the most serious breaches. Specific use cases, such as biometrics and the use of AI by law enforcement, are flagged.

Within the US, legislation is changing too. According to Dexcom, “AI laws and regulations have proliferated in the U.S. and globally in recent years, including in 2024. In 2024, there were 60 AI-related regulations at the U.S. federal and state levels, up from just one in 2016. In 2024 alone, the total number of AI-related regulations in the U.S. grew by 140%.”

Bias

The number of companies mentioning the risk of bias within datasets, algorithms and software has doubled

Companies are using an expanding vocabulary to signal the diverse ways in which AI can fail to meet quality expectations. The most widely cited quality shortcoming is the potential for bias, largely to be interpreted as models discriminating against certain subsets of customers. The number of companies flagging this risk within datasets, software and AI has doubled, from 76 to 152.
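Labels such as "doubled" and "tripled" can be checked directly against the year-on-year counts reported in this piece. A minimal sketch using only those figures:

```python
# (previous year, most recent year) counts of companies, as reported in the text.
mentions = {
    "deepfakes": (15, 39),
    "EU AI Act": (22, 67),
    "bias": (76, 152),
}

# Multiplicative change: 2.0 means the count doubled.
ratios = {term: curr / prev for term, (prev, curr) in mentions.items()}

for term, (prev, curr) in mentions.items():
    pct_change = (curr - prev) / prev  # relative increase over the previous year
    print(f"{term}: {prev} -> {curr}, {ratios[term]:.2f}x ({pct_change:+.0%})")
```

On these figures, deepfake mentions rose 2.6-fold (+160%), EU AI Act mentions just over threefold (3.05x, +205%), and bias mentions exactly doubled (+100%).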

Hype

1 in 10 companies added or expanded upon the risk of AI failing to deliver intended benefits, success or return on investment

Despite transformative advances in general AI capability, achieving a return on investment remains elusive for many companies that invest in and use AI extensively. AI presents a novel business model without an established track record. There is a clear incentive for companies to say they are using it in order to appear innovative, but quantifying the gains is difficult. 52 companies warn there can be no assurance that they will recoup their investments in AI or realise the intended benefits. This risk calls into question how much longer investment in AI can remain at present levels if companies experience prolonged uncertainty about its anticipated benefits. Tracking risks like this could be useful for monitoring the progression of the AI bubble.

This risk is distinct from the risk of failing to implement AI successfully; it focuses instead on AI initiatives that are deployed successfully yet still fall short of expectations. As T-Mobile observes with regard to the rollout of novel digital transformation initiatives, including those that use AI: “even if we successfully deploy these capabilities, customer adoption and employee acceptance may be slower than anticipated, diminishing the expected improvements to efficiency, service quality, or revenue generation”.

References

Each of the above statistics was derived from the following selection of 10-K filing extracts.